(PDF) Explaining the unsuitability of the kappa coefficient in the assessment and comparison of the accuracy of thematic maps obtained by image classification (2020) | Giles M. Foody | 87 Citations

The disagreeable behaviour of the kappa statistic - Flight - 2015 - Pharmaceutical Statistics - Wiley Online Library

(PDF) The Kappa Statistic in Reliability Studies: Use, Interpretation, and Sample Size Requirements Perspective | mitz ser - Academia.edu

Explaining the unsuitability of the kappa coefficient in the assessment and comparison of the accuracy of thematic maps obtained by image classification - ScienceDirect

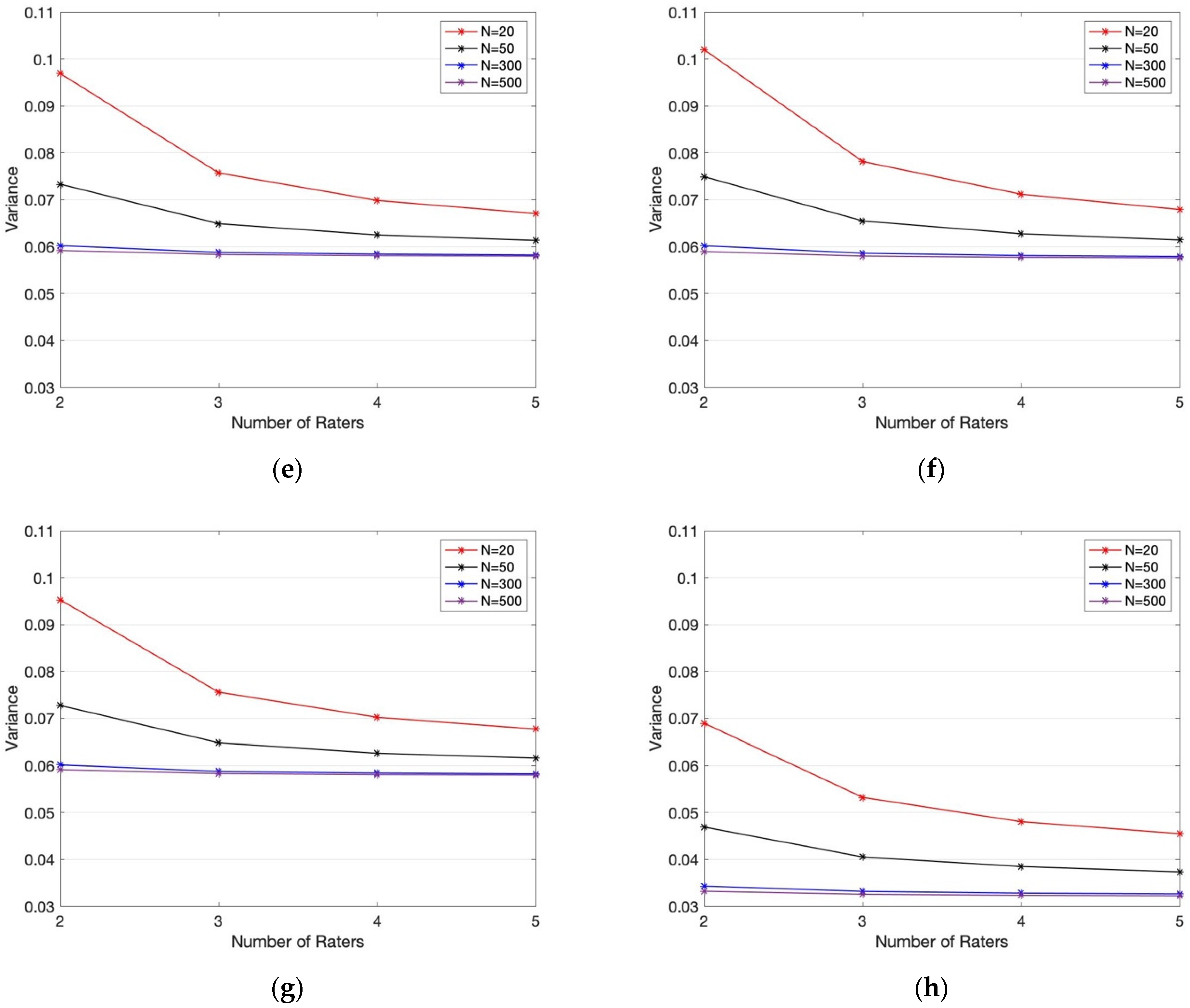

Symmetry | Free Full-Text | An Empirical Comparative Assessment of Inter-Rater Agreement of Binary Outcomes and Multiple Raters

Agree or Disagree? A Demonstration of An Alternative Statistic to Cohen's Kappa for Measuring the Extent and Reliability of Ag

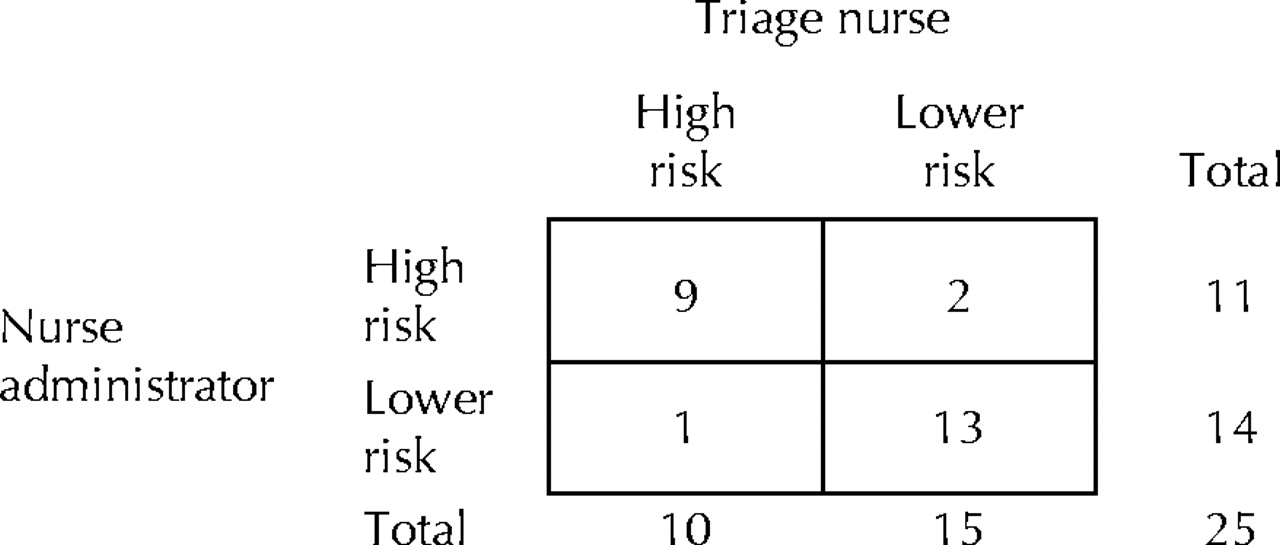

![The kappa statistic in reliability studies: use, interpretation, and sample size requirements. | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/6d3768fde2a9dbf78644f0a817d4470c836e60b7/4-Table3-1.png)

[PDF] The kappa statistic in reliability studies: use, interpretation, and sample size requirements. | Semantic Scholar

242-2009: More than Just the Kappa Coefficient: A Program to Fully Characterize Inter-Rater Reliability between Two Raters